This article was first published on SearchServerVirtualization.TechTarget.com.

Managing VM Sprawl with a VM Library

A compelling reason for most organizations to look into virtualization is to help manage “server sprawl” through datacenter consolidation. By running many virtual machines on the same hardware, you can realize significant cost savings and decrease administration overhead. But there’s a catch: because VMs are so easy to build, duplicate, and deploy, many organizations find that they are running into a related problem, “VM sprawl”.

Once they’re aware that the technology is available, users from throughout the organization often start building and deploying their own VMs without the knowledge of their IT departments. The result is a plethora of systems that don’t follow IT guidelines and practices. In this article, I’ll describe some of the problems that this can cause, along with details on how IT departments can rein in the management of virtual machines.

Benefits of VM Standardization

With the rise in popularity of virtualization products for both workstations and servers, users can easily build and deploy their own VMs. Often, these VMs don’t meet standards related to the following areas:

- Consistency: End-users rarely have the expertise (or inclination) to follow best practices related to enabling only necessary services and locking down their system configurations. The result is a wide variety of VMs that are all deployed on an ad-hoc basis. Supporting these configurations can quickly become difficult and time-consuming.

- Security: Practices such as keeping VMs up-to-date and applying the principle of least privilege will often be neglected by users who deploy their “home-grown” VMs. Often, the result is VMs that are a security liability and that might be susceptible to viruses, spyware, and related problems that can affect machines throughout the network.

- Manageability: Many IT departments include standard backup agents and other utilities on their machines. Users generally won’t install this software unless it’s something they specifically need.

- Licensing: In almost all cases, the operating systems and applications installed in a VM will require additional licenses. Even when end-users are careful, situations that involve client access licenses can quickly cause a department to become non-compliant.

- Infrastructure Capacity: Resources such as network addresses, host names, and other system settings must be coordinated with all of the computers in an environment. When servers that were formerly running only a few low-load applications are upgraded, they tend to draw more power (and require greater cooling). IT departments must be able to take all of this information into account, even when users are creating their own VMs.

Creating a VM Library

One method by which organizations can address problems related to “VM sprawl” is to create a fully-supported set of base virtual machine images. These images can follow the same rigorous standards and practices that are used when deploying physical machines. Security software, configuration details, and licensing should all be taken into account. Procedures for creating new virtual machines can be placed on an intranet, and users can be instructed to request access to virtual hard disks and other resources.

Enforcement is an important issue, and IT policies should specifically prohibit users from creating their own images without the approval of IT. This will allow IT departments to keep track of which VMs are deployed, along with their purpose and function. Exceptions might be made, for example, when software developers or testers need to create their own configurations for testing.
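As a rough illustration of what keeping track of deployed VMs might look like, here is a minimal Python sketch of an inventory. The `VMRecord` fields, the VM names, and the image names are all hypothetical; a real IT department would more likely keep this information in its existing asset-management system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VMRecord:
    """One entry in a hypothetical IT-maintained VM inventory."""
    name: str
    owner: str
    base_image: str  # which library image the VM was built from
    purpose: str
    approved: bool   # True once IT has signed off on the deployment


def unapproved(records):
    """Return the names of VMs that IT has not approved, sorted for stable output."""
    return sorted(r.name for r in records if not r.approved)


# Example data (all names are made up for illustration).
inventory = [
    VMRecord("build-01", "dev-team", "win2003-base", "nightly builds", True),
    VMRecord("test-07", "qa-team", "win2003-base", "regression tests", True),
    VMRecord("demo-xp", "sales", "unknown", "customer demo", False),
]

print(unapproved(inventory))  # ['demo-xp']
```

Even a simple list like this gives IT a way to answer the basic questions: which VMs exist, who owns them, what they are for, and which ones still need review.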

Designing Base VM Images

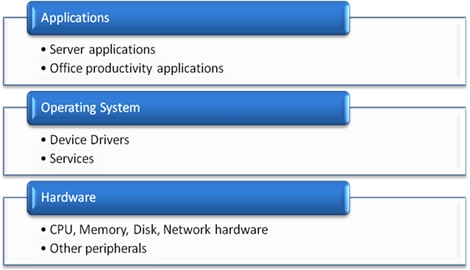

The process of determining what to include in a base VM image can be a challenge. One goal should be to minimize the number of base images that are required, in order to keep things simple and manageable. Another goal is to try to provide all of the most commonly-used applications and features in the base image. Often, these two requirements are at odds with each other. Figure 1 provides an example of some typical base images that might be created. Base images will need to be maintained over time, either through the use of automated update solutions or through the manual application of patches and updates.

Figure 1: Sample base VM images and their contents.

Supporting Image Duplication

With most virtualization platforms, duplicating a virtual machine image is as simple as copying one or a few files. However, there’s more to the overall process. Most operating systems require unique host names, network addresses, security identifiers, and other settings. IT departments should make it as easy as possible for users to manage these settings, since conflicts can cause major havoc throughout a network environment.

One option is for IT departments to manually configure these settings before “handing over” a VM image to a user. Another option is to use scripting or management software to make the changes. The details vary by operating system, but many operating systems offer tools for handling the deployment of new machines. One example is Microsoft’s Desktop Deployment Center, which includes numerous utilities for handling these settings (note that most utilities should work fine in virtual machines, even if support for virtualization is not explicitly mentioned).
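To make the scripting option a little more concrete, the following Python sketch checks a list of VMs for duplicate host names and network addresses before they are handed over. It is only an illustration: the dictionary format and the sample values are assumptions, and it does not touch security identifiers, which need to be regenerated with operating system tools.

```python
from collections import Counter


def find_conflicts(vms):
    """Return host names and IP addresses used by more than one VM.

    `vms` is a list of dicts with "hostname" and "ip" keys (a hypothetical
    format). Host names are compared case-insensitively.
    """
    host_counts = Counter(vm["hostname"].lower() for vm in vms)
    ip_counts = Counter(vm["ip"] for vm in vms)
    return {
        "hostnames": sorted(h for h, n in host_counts.items() if n > 1),
        "ips": sorted(ip for ip, n in ip_counts.items() if n > 1),
    }


# Example data: the second VM is a fresh copy that was never reconfigured.
vms = [
    {"hostname": "WEB-BASE", "ip": "10.0.0.20"},
    {"hostname": "web-base", "ip": "10.0.0.20"},
    {"hostname": "web-02", "ip": "10.0.0.21"},
]

print(find_conflicts(vms))
# {'hostnames': ['web-base'], 'ips': ['10.0.0.20']}
```

A check like this could run whenever a new copy is registered, so that conflicts are caught before the VM is connected to the network.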

Building Disk Hierarchies

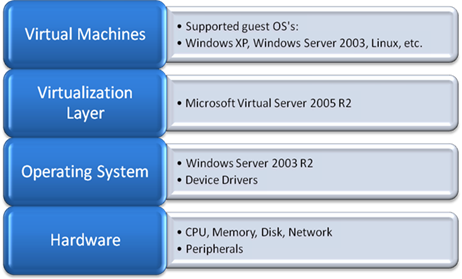

Many server virtualization platforms support features that allow for creating virtual hard disks that are based on other virtual hard disks. This helps with the goal of minimizing the number of available images while still providing as much of the configuration as possible: you can establish base operating systems and then add on “options” that users might require. Figure 2 provides an example for a Windows-based environment.

Figure 2: An example of a virtual hard disk hierarchy involving parent and child hard disks.

Keep in mind that there are technical restrictions that can make this process less than perfect. For example, once child disks have been created from it, a base virtual hard disk cannot be modified without invalidating those children, so if you need to add on service packs, security updates, or new software versions, you’ll need to do that at the “child” level.
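One way to reason about such a hierarchy is as a simple child-to-parent mapping. The Python sketch below is an illustration only: the disk file names are hypothetical and are not taken from Figure 2. It lists the disks a given child depends on, and the disks that should be left unchanged because other disks are built on top of them.

```python
# Child disk -> parent disk; base disks have no parent (None).
# All file names are hypothetical.
PARENTS = {
    "win2003-base.vhd": None,
    "win2003-iis.vhd": "win2003-base.vhd",
    "win2003-sql.vhd": "win2003-base.vhd",
    "win2003-iis-app.vhd": "win2003-iis.vhd",
}


def disk_chain(disk, parents):
    """Return the disks needed to use `disk`, from the disk itself up to its base."""
    chain = []
    seen = set()
    while disk is not None:
        if disk in seen:
            raise ValueError(f"cycle in disk hierarchy at {disk}")
        seen.add(disk)
        chain.append(disk)
        disk = parents[disk]
    return chain


def read_only_disks(parents):
    """Return disks that at least one child depends on, sorted by name."""
    return sorted({p for p in parents.values() if p is not None})


print(disk_chain("win2003-iis-app.vhd", PARENTS))
# ['win2003-iis-app.vhd', 'win2003-iis.vhd', 'win2003-base.vhd']
print(read_only_disks(PARENTS))
# ['win2003-base.vhd', 'win2003-iis.vhd']
```

The chain shows why updates belong at the child level: every disk above the child in the chain is shared, so changing it would affect every other disk that depends on it.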

Summary

Overall, through the use of virtual machine libraries, IT departments can make the process of creating and deploying virtual machines much easier on end-users. Simultaneously, they can avoid problems with inconsistent and out-of-date configurations, just as they would with physical computers. The end result is a win-win situation for those who are looking to take advantage of the many benefits of virtualization.