I’m currently working on a series of blog posts about my move from Gmail to the Office 365 Preview. Overall, my experience has been really positive, and I’ll be posting the details over the next few days. Unfortunately, I have run into an e-mail issue with my hosted Exchange Server instance: I have been unable to send any outbound messages for the last seven days (and counting, as of this writing). The service is currently in beta with limited support options, but I wanted to share what I think should be an important consideration for any IT organization evaluating cloud-based solutions: Customer Support.

Cloud Services Support: Potential Problems with Problem Resolution

In cloud-based architectures, end-users and administrators give up significant direct control over their infrastructure and place a great deal of reliance on another organization’s infrastructure. That approach comes with a wide array of potential benefits, including the ability to rely on tested, well-managed infrastructure run by specialists and experts.

Ideally, all of these cloud services would be completely reliable and there would be no need for technical support. But what happens when those ideals aren’t met? When evaluating cloud solution providers, it’s extremely important to consider how issues are handled when they do occur. It’s no secret that cloud services, in general, have had a checkered past and that outages and related problems will continue to occur. Over time, systems should become more resilient to failures, but in the meantime, it’s important to have quick, knowledgeable and responsive technical support and service.

Cloud Support Options: What to Look For

In addition to security, performance, and availability, problem resolution is a big issue to consider. In the case of my own small business (which is really just me), I’m not a high-visibility customer for any provider. I don’t have any leverage when it comes to negotiating contracts, SLAs, terms of service, and support agreements. For the most part, the service offerings are a take-it-or-leave-it proposition. Still, that’s no different from the implied contract with just about every hosted service we have come to rely on these days. Things do get a little different when you’re betting your business (and revenue) on someone else’s infrastructure.

In general, IT professionals should request (or demand, if necessary) the following information as part of their cloud provider evaluation:

- Historical Record: Service providers should be able to provide details on the number, types, and frequency of issues they’ve experienced in the past. They should provide an official statement that guarantees the accuracy of this information, to the best of their knowledge. It’s all too easy for cloud providers to choose not to report or record some issues, or to find technicalities that point the finger elsewhere. If your potential cloud provider is doing this during the “honeymoon” phase (pre-sales), don’t expect a happy marriage in the future.

- Time to Resolution: Problems, of course, will always happen, so the key is determining how quickly and efficiently issues have been resolved. It’s easy for a service provider to state that they resolved problems within minutes or hours of confirming them. But what about the entire process? How long does it take to reach someone when there’s a potential outage? How much time, on average, do customers spend before an issue is recognized? Is the support staff highly technical and well-trained, or will they force you to perform hours of unnecessary troubleshooting before they realize (or admit to) a problem? If possible, test your provider’s reactions by calling their support staff before you need them. It’s sometimes difficult to simulate a cloud-based outage, but you can simulate client-side issues and measure wait times and time to resolution.

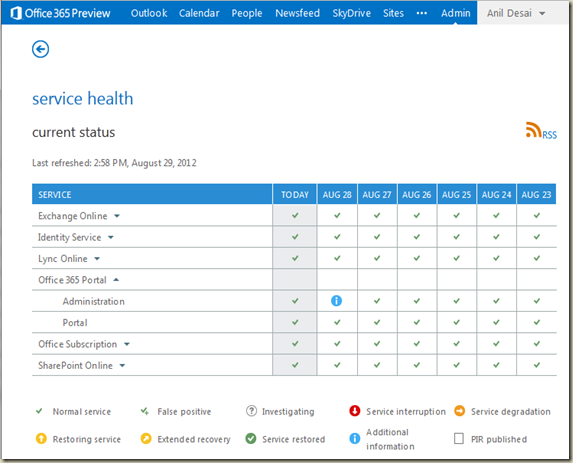

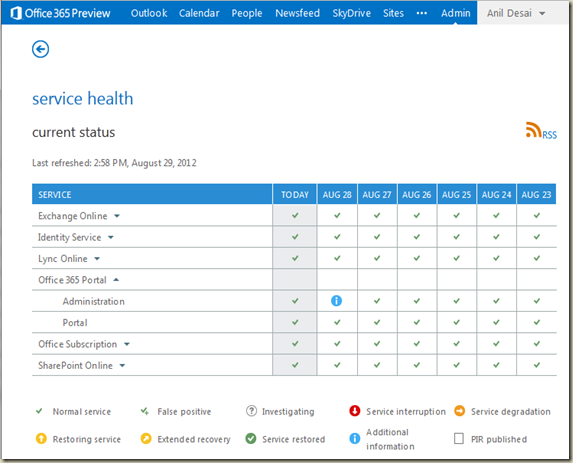

- Real-Time Status Information: Perhaps one of the most aggravating aspects of working with cloud services is being in the dark about what is going on with the infrastructure. If I have a service failure or outage in my own data center, I typically know what to do: I can collect more information, and I can attempt to isolate the cause of the problem or fail over to other systems. With cloud infrastructures, my hands are tied. Microsoft Office 365 Preview, in my opinion, is a good step in the right direction (see the screenshots above). In the summary view, you can see the last several days’ worth of issues, along with real-time status. But there’s a catch: Is the information accurate and valid? (In my case, described below, it most certainly isn’t: other users and I have had a serious e-mail outage for over a week now, and it still hasn’t been reported for the beta service.) Another plus: The information icons allow users to see details about an issue. The information might be limited, but it’s definitely much better than flying completely blind.

- Service Level Agreements (SLAs) with Meaningful Penalties: Downtime, data loss, slowdowns, and other issues can be costly, so it’s really important to get real terms that make providers pay affected users for their infrastructure outages. A simple pro-rated refund is ridiculous in these situations (for example, would you be satisfied with receiving a $300 credit for three hours of downtime during business hours?). Instead, customers should negotiate a minimum per-incident credit amount, along with rapidly increasing compensation for downtime or data loss. Personally, I would like to see clauses that state that, if problems can’t be resolved, a provider will pay me to go to their competitors. Cloud providers that trust their infrastructure shouldn’t balk at these terms, so make sure that their pain is at least as great as yours when failures occur.

- Escalation Processes: Especially for knowledgeable IT staff, customers should have the option of forcing an escalation if their issues aren’t being addressed properly. In the case I mention below, my requests were all completely ignored, and I was left with nowhere else to turn (other than, perhaps, to a competing service or back to an on-premises solution). Perhaps larger customers could have called their account representatives or would have some leverage through other avenues (I contacted my Microsoft MVP Lead, who was very helpful). But customers shouldn’t have to go through all of this.

Of course, this list is just a starting point. It’s important for IT departments to get expert legal input when negotiating terms with their cloud service providers. If that makes a potential business partner sweat, it’s much better to find this out early, rather than when your organization is losing huge amounts of time and money after problems occur.
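
To make the SLA point concrete, here is a minimal Python sketch that compares a pro-rated refund with a flat per-incident minimum plus compensation that escalates with each hour of downtime. Every figure in it (a $1,000 monthly fee, a 30-day month, a $500 minimum, $100 for the first hour, doubling each hour after that) is a hypothetical number chosen only to show the shape of the two approaches; none of them come from any real provider’s terms.

```python
# Hypothetical SLA terms, chosen only for illustration.
MONTHLY_FEE = 1000.00        # assumed monthly subscription fee, in dollars
HOURS_PER_MONTH = 30 * 24    # assumed 30-day billing month
MINIMUM_CREDIT = 500.00      # assumed flat credit for any qualifying incident
FIRST_HOUR_CREDIT = 100.00   # assumed credit for the first hour of downtime
ESCALATION_FACTOR = 2.0      # assumed: each additional hour is worth double the previous one


def prorated_credit(downtime_hours: float) -> float:
    """Refund only the share of the monthly fee that covers the outage."""
    return MONTHLY_FEE * downtime_hours / HOURS_PER_MONTH


def escalating_credit(downtime_hours: int) -> float:
    """Flat per-incident minimum plus hourly compensation that grows by ESCALATION_FACTOR each hour."""
    hourly = sum(FIRST_HOUR_CREDIT * ESCALATION_FACTOR ** hour for hour in range(downtime_hours))
    return MINIMUM_CREDIT + hourly


for hours in (1, 3, 8):
    print(f"{hours} h outage: pro-rated ${prorated_credit(hours):,.2f}, "
          f"escalating ${escalating_credit(hours):,.2f}")
```

With these assumed figures, a three-hour outage earns roughly $4.17 under the pro-rated refund versus $1,200.00 under the escalating terms, which is the kind of gap that gives a provider a real financial incentive to resolve problems quickly.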

A Case in Point: An Office 365 Preview E-Mail Service Outage

While running the Office 365 Preview, I ran into an issue that seems to have affected numerous users: I was unable to send outbound e-mail. The problem went on for days before I received non-delivery notices. It affected my consulting business (my clients didn’t receive important updates about production changes), and it forced me to scramble to use an account with another provider to keep my business running. Sure, I’m only one person and this is a beta service with limited support, but I think there’s a good lesson to learn here.

I won’t go into all of the technical details beyond the description above. After an hour of phone-based troubleshooting, unnecessary configuration changes (including changes to my hosted DNS settings), and at least a dozen e-mails back and forth, I was finally able to get Microsoft to recognize the issue. For details, you can see my post titled Outbound Mail Failures: #550 4.4.7 QUEUE.Expired; message expired ##. It took several days for users (myself included) to notice that messages were not being delivered. Once we did, numerous users reported errors, and all were asked to perform basic troubleshooting that was completely irrelevant to the problem. Responses often took days, and my specific, direct questions were completely ignored. I understand that support resources are limited, but I needed some actionable advice: If services couldn’t be restored (or Microsoft was unwilling to try), I needed to start changing my DNS records and moving services elsewhere. Support staff should have realized that the problem affected multiple users, that it started at the same time for many of us, that all services were working fine before then, and that several of the people who posted (myself included) were highly technical. The issue should have been escalated or, at the very least, reported as a known issue. That would have reduced some of the uncertainty. Instead, I ended up just waiting… and waiting.
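
The bounce text in that post title carries an enhanced mail status code (the `4.4.7` part), whose class.subject.detail structure is defined in RFC 3463. Decoding it can help when deciding whether a bounce points to your own configuration or to the provider’s delivery path. The following is a minimal Python sketch covering only a small subset of the RFC’s codes; the function name `explain_ndr` and the lookup tables are hypothetical, written for illustration, and are not part of any Exchange or Office 365 tooling.

```python
import re
from typing import Optional

# A small subset of the RFC 3463 enhanced status codes; extend as needed.
CLASSES = {
    "2": "success",
    "4": "persistent transient failure",
    "5": "permanent failure",
}
SUBJECTS = {
    "0": "other or undefined status",
    "1": "addressing status",
    "2": "mailbox status",
    "3": "mail system status",
    "4": "network and routing status",
    "5": "mail delivery protocol status",
    "6": "message content or media status",
    "7": "security or policy status",
}
# Only one detail code is listed here: X.4.7, the code from the bounce in question.
DETAILS = {
    ("4", "7"): "delivery time expired",
}

# Optional three-digit SMTP reply code, then a class.subject.detail enhanced code.
NDR_CODE = re.compile(
    r"(?:(?P<reply>[245]\d\d)\s+)?(?P<cls>[245])\.(?P<subject>\d{1,3})\.(?P<detail>\d{1,3})"
)


def explain_ndr(text: str) -> Optional[dict]:
    """Return a breakdown of the first enhanced status code found in text, or None."""
    match = NDR_CODE.search(text)
    if match is None:
        return None
    cls, subject, detail = match.group("cls", "subject", "detail")
    return {
        "reply_code": match.group("reply"),
        "enhanced_code": f"{cls}.{subject}.{detail}",
        "class": CLASSES.get(cls, "unknown"),
        "subject": SUBJECTS.get(subject, "unknown"),
        "detail": DETAILS.get((subject, detail), "unknown"),
    }


print(explain_ndr("#550 4.4.7 QUEUE.Expired; message expired ##"))
```

For this bounce, the sketch decodes `4.4.7` as a network and routing status whose delivery time expired, which is consistent with messages sitting in the outbound queue for days before the non-delivery notices arrived.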

Overall, it took nearly a week after the problem began for Microsoft to start looking into it. Because the service is cloud-based, there was very little troubleshooting I could do myself, regardless of my technical knowledge. The sense of helplessness is difficult enough when dealing with a single e-mail account and support limited to discussion forums; it could be catastrophic when dealing with dozens or hundreds of affected accounts.

In all fairness, the Office 365 program I’m subscribed to is currently free and is in a beta/preview mode. Microsoft was very clear that the service is not currently designed for production use (I knew that going in) and that support resources were limited. It’s not my intention to single out Microsoft (especially for a “Preview” product). I’d like to add that, in many cases, Microsoft’s support levels have been exemplary for real-world, supported production issues I ran across. (Many years ago, I even had Microsoft Product Support Services offer to create a hotfix for a SQL Server issue my company was experiencing!)

On the bright side, most of the people I talked to about this issue were knowledgeable about their infrastructure and had good troubleshooting skills. That’s something that’s often not available to small businesses. I am sure that support for live, production instances would be much more responsive. But this experience underscores the importance of a cloud provider’s technical support processes.

Lesson Learned: Always Have an Alternative

It might sound like common sense, but putting fallback systems in place can be complicated, time-consuming, and tedious. However, with so many online services readily available, it makes sense to have alternatives to choose from in a pinch. In my case, I was able to fall back to Gmail for outbound messages and, by setting up automatic forwarding, remain up and running.
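
The same fallback idea can be applied to automated outbound mail, such as status notifications sent by scripts. Below is a minimal Python sketch that tries a primary SMTP relay and, if that fails, moves on to a backup relay. The host names, port, addresses, and credentials are placeholders, and the helper names `smtp_sender` and `send_with_fallback` are hypothetical; the sketch covers only outbound sending, not the inbound forwarding side of the setup.

```python
import smtplib
from email.message import EmailMessage
from typing import Callable, List, Sequence

# A "sender" is any function that delivers a message or raises on failure.
Sender = Callable[[EmailMessage], None]


def smtp_sender(host: str, port: int, user: str, password: str) -> Sender:
    """Build a sender that relays through an SMTP server using STARTTLS."""
    def send(msg: EmailMessage) -> None:
        with smtplib.SMTP(host, port, timeout=30) as server:
            server.starttls()
            server.login(user, password)
            server.send_message(msg)
    return send


def send_with_fallback(msg: EmailMessage, senders: Sequence[Sender]) -> int:
    """Try each sender in order and return the index of the first that succeeds."""
    errors: List[Exception] = []
    for index, send in enumerate(senders):
        try:
            send(msg)
            return index
        except (smtplib.SMTPException, OSError) as exc:
            # Connection, DNS, TLS, and SMTP-level failures all land here.
            errors.append(exc)
    raise RuntimeError(f"all {len(senders)} senders failed: {errors!r}")


# Hypothetical relays and credentials; replace with your own.
primary = smtp_sender("smtp.primary.example", 587, "me@example.com", "app-password")
backup = smtp_sender("smtp.backup.example", 587, "me@example.com", "app-password")

msg = EmailMessage()
msg["From"] = "me@example.com"
msg["To"] = "client@example.com"
msg["Subject"] = "Production change update"
msg.set_content("The scheduled change completed successfully.")
# send_with_fallback(msg, [primary, backup])  # needs reachable relays
```

Keeping each relay behind a plain function makes the ordering easy to change in a pinch and lets the fallback logic be tested without a live mail server.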

Of course, not all systems are as simple to configure. For example, a CRM backup instance or a relational database disaster recovery implementation can take a lot of time and effort to set up and manage. Still, as the saying goes, it’s good to hope for the best and plan for the worst.

A Less Cloudy Outlook

Just to be clear, I really believe in the cloud architecture approach, and I think it will continue to have a dramatic impact on how organizations implement IT services. I understand (first-hand, in this case!) why people have trepidations about trusting other organizations with their infrastructure. But trust is earned over time, and hopefully by deeds rather than promises. Overall, I’m excited about the future of hosted applications, platforms, and infrastructure. For now, though, it looks like IT professionals will have to plan and manage with a partly cloudy outlook on outsourced infrastructure.

Part of the migration process for me was making sure that, after everything was transferred successfully, I’d be able to create a full backup of my Gmail content. It’s not that I’m worried about Gmail going away anytime in the foreseeable future. While I had the vast majority of this content organized in Outlook, I periodically deleted attachments from my messages, and there’s always the chance that I accidentally deleted something important. My Gmail account isn’t going away, and I can always search for content through the web interface. However, I like the convenience and usability of having an indexed .PST file and raw messages if I ever need them while offline.