This article was first published on SearchServerVirtualization.TechTarget.com.

Historically, organizations have fallen into the trap of thinking about security implications only after they deploy new technology. Virtualization offers so many compelling benefits that it’s often an easy sell into IT architectures. But what about the security implications of using virtualization? In this tip, I’ll present the security-related pros and cons of virtualization technology. The goal is to give you an overview of the different types of concerns you should have in mind. In a future article, I’ll look at best practices for addressing these issues.

Security Benefits of Virtualization

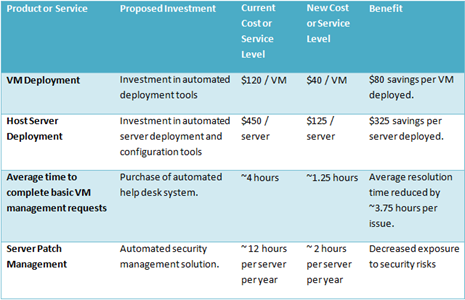

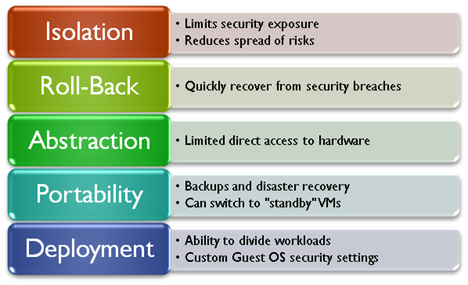

There are numerous potential security benefits to running workloads in a VM (vs. running them on physical machines). Figure 1 provides an overview of these benefits, along with some basic details.

Figure 1: Virtualization features and their associated security benefits.

Since virtual machines are created as independent and isolated environments, systems administrators have the ability to easily configure them in a variety of ways. For example, if a particular VM doesn’t require access to the Internet or to other production networks, the VM itself can be configured with limited connectivity to the rest of the environment. This helps reduce risks related to the infection of a single system affecting numerous production computers or VMs.

If a security violation (such as the installation of malware) does occur, a VM can be rolled back to a particular point in time. This method is a poor fit for file and application services, where a rollback would also discard legitimate changes made since that point, but it is very useful for VMs that contain relatively static information (such as web server workloads).

Theoretically, a virtualization product adds a layer of abstraction between the virtual machine and the underlying physical hardware. This can help limit the amount of damage that might occur when, for example, malicious software attempts to modify data. Even if an entire virtual hard disk is corrupted, the physical hard disks on the host computer will remain intact. The same is true for other components such as network adapters.

Virtualization is often used for performing backups and disaster recovery. Due to the hardware-independence of virtualization solutions, the process of copying or moving workloads can be simplified. In the case of a detected security breach, a virtual machine on one host system can be shut down, and another “standby” VM can be booted on another system. This leaves plenty of time for troubleshooting, while quickly restoring production access to the systems.

Finally, with virtualization it’s easier to split workloads across multiple operating system boundaries. Due to cost, power, and physical space constraints, developers and systems administrators may be tempted to host multiple components of a complex application on the same computer. By spreading functions such as middleware, databases, and front-end web servers into separate virtual environments, IT departments can configure the best security settings for each component. For example, the firewall settings for the database server might allow only direct communication with a middle-tier server and a connection to an internal backup network. The web server component, on the other hand, could be limited to access via standard HTTP ports.
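The per-component firewall idea can be sketched as a small allow-list policy. This is only an illustration under stated assumptions, not a real firewall configuration: the tier names, port numbers, and the `is_allowed` helper are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    source: str  # hypothetical name of the tier or network sending traffic
    port: int    # destination TCP port (example values, not from the article)


# Hypothetical allow-lists, one per VM. Anything not listed is denied.
POLICY = {
    "web": {Rule("internet", 80), Rule("internet", 443)},
    "middleware": {Rule("web", 8080)},
    "database": {Rule("middleware", 5432), Rule("backup-net", 5432)},
}


def is_allowed(dest_vm: str, source: str, port: int) -> bool:
    """Default deny: traffic passes only if an explicit rule matches."""
    return Rule(source, port) in POLICY.get(dest_vm, set())
```

With this policy, `is_allowed("database", "middleware", 5432)` is true, while a direct connection from `"internet"` to the database VM is rejected. Because each tier lives in its own VM, each allow-list can stay short and specific to that component.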

This is by no means a complete list of the security benefits of virtualization, but it is a quick overview of the security potential of VMs.

Potential Security Issues

As with many technology solutions, there are potential security downsides to using virtual machines. Some of the risks are inherent in the architecture itself, while others are issues that can be mitigated through improved systems management. A common concern for adopters of virtual machine technology is the issue of placing several different workloads on a single physical computer. Hardware failures and related issues could potentially affect many different applications and users. In the area of security, it’s possible for malware to place a significant load on system resources. Instead of affecting just a single VM, such a load is likely to affect other virtualized workloads on the same computer.

Another major issue with virtualization is the tendency for organizations to deploy many different system configurations. In the world of physical server deployments, IT departments often have a rigid process for reviewing systems prior to deployment. They ensure that only supported configurations are set up in production environments and that the systems meet the organization’s security standards. In the world of virtual machines, many otherwise-unsupported operating systems and applications can be deployed by just about any user in the environment. It’s often difficult enough for IT departments to know what they’re managing, let alone how to manage a complex and heterogeneous environment.
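The review step described above can be pictured as an audit that compares discovered VMs against a list of approved configurations. The following is a minimal sketch, assuming a hypothetical inventory format; the field names, operating system labels, and the `find_unsupported` helper are invented for illustration and do not correspond to any particular virtualization product.

```python
# Hypothetical set of guest operating systems the IT department supports.
APPROVED_OS = {"windows-server-2003", "rhel-5"}


def find_unsupported(inventory):
    """Return the names of VMs whose guest OS is not on the approved list."""
    return [vm["name"] for vm in inventory if vm["guest_os"] not in APPROVED_OS]


# Example inventory, as it might be collected from each host (made-up data).
inventory = [
    {"name": "web01", "guest_os": "rhel-5"},
    {"name": "dev-test", "guest_os": "freebsd-6"},
]

print(find_unsupported(inventory))  # prints ['dev-test']
```

In practice, the hard part is building an accurate inventory in the first place, which is exactly the difficulty noted above.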

The security of a host computer becomes more important when different workloads are run on the system. If an unauthorized user gains access to a host OS, he or she may be able to copy entire virtual machines to another system. If sensitive data is contained in those VMs, it’s often just a matter of time before the data is compromised. Malicious users can also cause significant disruptions in service by changing network addresses, shutting down critical VMs, and performing host-level reconfigurations.

When considering security for each guest OS, it’s important to keep in mind that VMs are also vulnerable to attacks. If a VM has access to a production network, then it often will have the same permissions as a physical server. Unfortunately, VMs don’t have the benefit of limited physical access, such as the controls used in a typical data center environment. Each new VM is a potential liability, and IT departments must ensure that security policies are followed and that systems remain up-to-date.

Summary

Much of this might cast a large shadow over the virtualization security picture. The first step in addressing security is to understand the potential problems with a particular technology. The next step is to find solutions. Rest assured, there are ways to mitigate these security risks. That’s the topic of my next article, “Best Practices for Improving VM Security.”