A Brief History of [Wasted] Time

Over the last couple of decades, practical PC bottlenecks have moved. I remember a time when upgrading CPUs could provide a near-linear speed increase with respect to clock speed (does anyone remember the thrill of going from a 33MHz 486 processor to a 486/66?). Later, keeping the processor fed became more important. Improved memory bus speeds, lower cache latency, larger cache sizes, and more RAM often provided the best performance increase. And of course, we had network issues – starting with dial-up performance. Thankfully, most of those resources are no longer the slowest components in modern PCs. In fact, CPUs have increased in performance to the point that clock speed increases give little practical benefit for most users (on the client side, at least).

Wringing some [Bottle]necks…

Over the last several years, the primary bottleneck on most of my machines (notebooks, development desktops, and music production machines) has been hard disk performance. If I was waiting for something, it was more than likely the hard drive. The high number and frequency of random I/Os often resulted in significant delays. Even with large amounts of RAM, launching programs, loading web pages, and performing builds in Visual Studio could take a lot of time. High-speed, low-latency hard disks helped a little. And, if you could stomach the risk of data loss, RAID-0 configurations could alleviate some of the pain. But disk access remained the slow step in many processes.

One of my clients, Arrow Value Recovery (formerly TechTurn, Inc.), was kind enough to lend me a Samsung 128GB Solid State Disk (SSD) to test. At first, I imaged my notebook Windows 7 Release Candidate installation and placed it on the SSD. I was expecting an incremental increase in performance (at least for random, small reads). The overall results, however, were amazing! Applications launched in just a few seconds, and some basic benchmarks provided all the evidence I needed to place the new disk in my primary development machine (a Dell Dimension XPS 420 with two 500GB, 7200RPM drives). Now, after just a couple of weeks, I can’t imagine going back to “old school” physical drives.

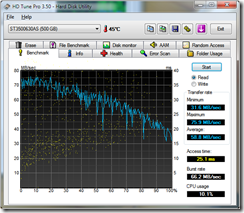

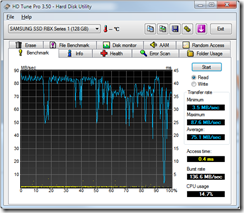

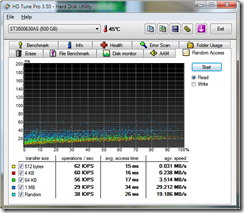

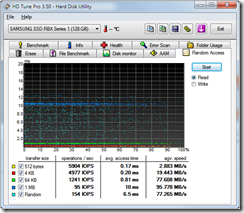

SSDs are new to the marketplace, and they’re not without significant potential drawbacks. In the coming weeks, I’ll provide some more details on the experience. For now, here are a couple of basic benchmarks created using HDTune. The basic comparison is between a Samsung 500GB, 7200 RPM hard disk (16MB cache) and the 128GB Samsung SSD. I did absolutely nothing to optimize the performance of the SSD, so consider this just a baseline.

Benchmarks

HDTune – Disk Benchmarks

Figure 1a: HDD Performance (Dell XPS 420)

Figure 1b: SSD Performance (Dell XPS 420)

HDTune – Random Access Performance

Figure 2a: HDD Performance (Dell XPS 420)

Figure 2b: SSD Performance (Dell XPS 420)

I realize that this data is completely anecdotal and unscientific, but it’s a promising start. So far, the general performance improvement from using an SSD has been the single most noticeable upgrade in several years.
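If you want a rough feel for the sequential versus random access gap on your own disk, the Python sketch below times 4 KB reads in both orders against a temporary file. To be clear, this is my own illustration and not how HDTune measures anything: the block size, the file size, and the names `make_test_file`, `timed_read`, and `compare` are all assumptions made up for the example. The operating system's file cache will likely serve most of these reads from RAM, so treat the numbers as a curiosity unless the test file is much larger than your memory.

```python
import os
import random
import tempfile
import time

BLOCK_SIZE = 4096  # assumed block size (4 KB) for this illustration


def make_test_file(path, num_blocks):
    """Fill the file at `path` with num_blocks blocks of random bytes."""
    with open(path, "wb") as f:
        for _ in range(num_blocks):
            f.write(os.urandom(BLOCK_SIZE))


def timed_read(path, offsets):
    """Read one block at each offset; return (elapsed_seconds, bytes_read)."""
    total = 0
    start = time.perf_counter()
    # buffering=0 skips Python's own buffer; the OS cache still applies.
    with open(path, "rb", buffering=0) as f:
        for offset in offsets:
            f.seek(offset)
            total += len(f.read(BLOCK_SIZE))
    return time.perf_counter() - start, total


def compare(num_blocks=2048, seed=0):
    """Time the same set of block reads in sequential and shuffled order."""
    sequential = [i * BLOCK_SIZE for i in range(num_blocks)]
    shuffled = sequential[:]
    random.Random(seed).shuffle(shuffled)

    fd, path = tempfile.mkstemp()
    os.close(fd)
    try:
        make_test_file(path, num_blocks)
        seq_time, seq_bytes = timed_read(path, sequential)
        rnd_time, rnd_bytes = timed_read(path, shuffled)
    finally:
        os.remove(path)

    return {
        "sequential_s": seq_time,
        "random_s": rnd_time,
        "sequential_bytes": seq_bytes,
        "random_bytes": rnd_bytes,
    }


if __name__ == "__main__":
    result = compare()
    print("sequential: %.4f s" % result["sequential_s"])
    print("random:     %.4f s" % result["random_s"])
```

Both passes read exactly the same blocks, so any timing difference comes from the order of access alone. On a spinning disk with a cold cache, the shuffled pass is where the seek time shows up.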

More to come…

Again, I hope to post some more detailed data (with a focus on benefits for development workstations) in the coming weeks. Now if only my own sequential writing speed could match that of the SSD… 🙂

Update: The Engineering Windows 7 blog has a post that covers Support and Q & A for Solid-State Drives. It provides some technical background on the difference between random and sequential I/O, and on issues related to random writes.

#1 by dedonder on May 22, 2009 - 3:38 am

SSDs really run circles even around the best enterprise disks. Check out